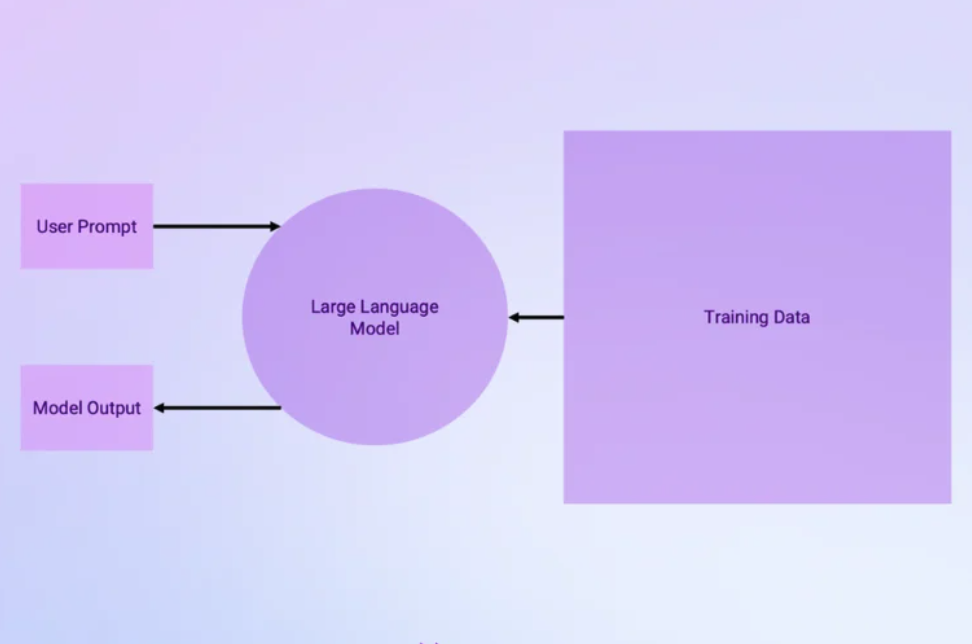

In recent years, large language models (LLMs) have revolutionized the field of Natural Language Understanding (NLU). These models, built on deep learning techniques, have displayed astonishing capabilities in generating human-like text. However, their sheer size and resource requirements have raised concerns about their efficiency and practicality. At Allganize, we believe it is essential to rethink our approach to large language models to strike a balance between power and practicality.

This article explores how we can navigate the complex terrain of LLMs while reaping the benefits they offer in a conscientious and ethical manner.

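To enhance efficiency, researchers are exploring ways to make models smaller and cheaper to run. Techniques like knowledge distillation (training a smaller "student" model to mimic a larger "teacher"), model pruning (removing weights or structures that contribute little to the output), and quantization (storing weights at lower numerical precision, such as 8-bit integers instead of 32-bit floats) have shown promising results in reducing the size and computational requirements of large models, often with only a small loss in accuracy. By streamlining models in these ways, we can make them more accessible and practical for a wide range of applications.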
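To make the three techniques more concrete, here is a minimal, dependency-free sketch of each on a toy list of weights. It assumes symmetric per-tensor int8 quantization, unstructured magnitude pruning, and the temperature-softened KL distillation loss popularized by Hinton et al.; real toolkits operate on tensors and offer many more variants, and none of the function names below come from a specific library.

```python
import math

def quantize_int8(weights):
    # Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127].
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    # Map the int8 codes back to approximate float weights.
    return [v * scale for v in q]

def magnitude_prune(weights, sparsity):
    # Zero out the fraction `sparsity` of weights with the smallest absolute value.
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned

def softmax(logits, temperature=1.0):
    # Temperature > 1 produces a softer distribution; subtract the max for stability.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    # KL(teacher || student) on softened distributions, scaled by T^2 so gradient
    # magnitudes stay comparable across temperatures.
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl

weights = [0.8, -0.05, 0.3, -1.2, 0.01, 0.6]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
sparse = magnitude_prune(weights, 0.5)
```

Each int8 code takes a quarter of the storage of a 32-bit float, and the rounding error per weight is at most half of `scale`. In practice the distillation term is usually combined with the ordinary training loss on ground-truth labels.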

One size does not fit all when it comes to language models. By allowing users to customize models and assemble them from modular components, we can tailor their functionality to specific use cases, resulting in more efficient and focused solutions. This approach not only reduces unnecessary computational overhead but also makes it possible to deploy models on resource-constrained devices.
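One widely used way to customize a shared model cheaply is low-rank adaptation (LoRA), where a frozen base weight matrix is kept as-is and each use case trains only a small pair of low-rank matrices. This is offered purely as an illustration of the modular idea; the article does not say which customization method Allganize uses. The sketch below, in plain Python with hypothetical function names, compares trainable parameter counts and shows how an adapter is applied on top of the shared weights.

```python
def full_finetune_params(d_in, d_out):
    # Fine-tuning a dense weight matrix W (d_out x d_in) updates every entry.
    return d_in * d_out

def low_rank_adapter_params(d_in, d_out, rank):
    # A low-rank adapter learns B @ A with A: (rank x d_in) and B: (d_out x rank).
    # Only A and B are trained; W stays frozen and is shared across use cases.
    return rank * (d_in + d_out)

def matvec(M, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def adapted_forward(W, A, B, x):
    # y = W x + B (A x): shared base output plus a task-specific low-rank correction.
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [b + d for b, d in zip(base, delta)]

full = full_finetune_params(4096, 4096)
adapter = low_rank_adapter_params(4096, 4096, rank=8)
ratio = adapter / full
```

For a hypothetical 4096 x 4096 layer, a rank-8 adapter trains 65,536 parameters instead of 16,777,216 (about 0.39%). Because each adapter is small, one base model can serve many use cases by swapping adapters rather than storing a full copy of the model per customer.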

Training large language models from scratch is computationally expensive and time-consuming. Incremental learning and transfer learning offer ways to reduce that cost. Incremental (or continual) learning aims to let models learn new tasks or data without forgetting previously acquired knowledge, a failure mode known as catastrophic forgetting, while transfer learning reuses a pre-trained model as the starting point for a new task, significantly reducing training time and computational resources.
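The sketch below illustrates both ideas at toy scale, under loudly stated assumptions. For transfer learning, `pretrained_features` is a hypothetical stand-in for a frozen pre-trained encoder, and only a small logistic-regression head is trained on top of it. For incremental learning, it shows the penalty term of Elastic Weight Consolidation (EWC), one published technique for limiting catastrophic forgetting; it is an example of the approach, not necessarily the one used in production LLM pipelines.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def pretrained_features(x):
    # Hypothetical frozen encoder: in practice a large pre-trained model whose
    # weights are NOT updated. The last entry acts as a bias feature.
    return [x[0] + x[1], x[0] - x[1], 1.0]

def train_head(data, epochs=200, lr=0.5):
    # Transfer learning: only the small linear head `w` is trained
    # (logistic regression with SGD); the encoder is reused as-is.
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y in data:
            f = pretrained_features(x)
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            grad = p - y  # derivative of the log loss with respect to the logit
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
    return w

def predict(w, x):
    return sigmoid(sum(wi * fi for wi, fi in zip(w, pretrained_features(x))))

def ewc_penalty(params, old_params, fisher, lam):
    # EWC: penalize moving parameters that were important (high Fisher
    # information) for a previously learned task.
    return 0.5 * lam * sum(
        f * (p - o) ** 2 for p, o, f in zip(params, old_params, fisher)
    )

data = [((1.0, 2.0), 1), ((2.0, 1.0), 1), ((0.5, 1.0), 1),
        ((-1.0, -2.0), 0), ((-2.0, -1.0), 0), ((-1.0, -0.5), 0)]
head = train_head(data)
```

When training on a new task, the EWC penalty is added to that task's loss, so parameters that mattered for earlier tasks are pulled back toward their old values while less important ones remain free to change.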

Efficiency gains can also be achieved by optimizing the hardware infrastructure supporting large language models. Specialized hardware accelerators, such as Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs), can significantly speed up model training and inference, making large language models more practical for real-time applications.
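When sizing accelerators, a quick back-of-the-envelope estimate often helps. The sketch below uses common rules of thumb rather than measurements: weight memory is parameter count times bytes per parameter; a forward pass costs roughly 2 FLOPs per parameter per token; and single-stream text generation is often limited by memory bandwidth, since every weight is read once per generated token. The model size and bandwidth figures are hypothetical, and the estimates ignore activations, the KV cache, and batching.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

def weight_memory_gb(n_params, dtype):
    # Memory needed just to hold the weights (excludes activations and KV cache).
    return n_params * BYTES_PER_PARAM[dtype] / 1e9

def forward_flops_per_token(n_params):
    # Rule of thumb: about one multiply and one add per parameter per token.
    return 2 * n_params

def decode_tokens_per_sec_upper_bound(n_params, dtype, bandwidth_gb_s):
    # Assumes batch size 1 and bandwidth-bound decoding: each token streams
    # all weights from accelerator memory once. Real throughput will be lower.
    return bandwidth_gb_s / weight_memory_gb(n_params, dtype)

n = 7e9  # hypothetical 7-billion-parameter model
fp16_gb = weight_memory_gb(n, "fp16")
int8_gb = weight_memory_gb(n, "int8")
tokens_per_s = decode_tokens_per_sec_upper_bound(n, "fp16", bandwidth_gb_s=1000)
```

Under these assumptions, the model needs about 14 GB for fp16 weights (7 GB at int8), and an accelerator with 1,000 GB/s of memory bandwidth could generate at most roughly 71 tokens per second for a single stream. This is also why quantization and faster memory both matter for real-time use.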

As large language models continue to evolve, it is crucial to prioritize efficiency and performance to unlock their full potential. By rethinking their architectures, enabling customization, leveraging incremental learning and transfer learning, and optimizing hardware, we can make these models more efficient, accessible, and practical for a wide range of NLU applications. At Allganize, we are committed to driving advancements in large language models that not only push the boundaries of AI but also make a positive impact on businesses and society as a whole.

Unlock new insights and opportunities with custom-built LLMs tailored to your business use case. Contact our team of experts for consultancy and development needs and take your business to the next level.